How To Achieve Low Latency in Video Streaming (Quick Tips)

You might be wondering what the term latency means. In layman’s terms, video latency is the time delay between input and output, also known as lag. In short, latency is the culprit when a game is on and you hear your neighbor cheer for a boundary while you’re still waiting for the player to hit the ball.

In other words, latency is responsible for those three awkward seconds when a reporter on the scene stares into the camera before answering the anchor’s question. That said, latency varies, and low-latency networks can reduce this delay considerably.

“If your audience frequently watches live video content, then latency can have a significant impact on their video viewing experience.”

Ultra low latency video streaming is especially important on interactive live video streaming platforms, as high latency can have a significant impact on end users’ experience. In fact, low latency has become a major selling point in an increasingly competitive market.

Providing a low latency streaming platform to your audience has become an essential strategy for staying ahead of the competition. With that being said, let’s dive into what low latency video streaming is and how low-latency video streaming services could actually help any online video platform in the long run.

So, let us get started!!!

What is Low Latency Video Streaming?

Low latency streaming means your audience sees the live stream within about five seconds of capture. Yes, you heard that right! The live-streamed video reaches your viewers in less than five seconds; in the case of video conferencing, latency is near real-time, thanks to advances in technology.

Quality can be 4K or HD, but if the video reaches your audience late, it can still cause issues. The importance of balancing quality and latency cannot be overstated. Whether it’s screen-to-screen interactions, interactive streaming, remote operations with video streams, online video gaming, or video calling, keeping latency low is unquestionably important in today’s technological world.

Among the several video streaming service providers, only a handful of top OTT platform providers are able to deliver absolute low latency video streaming to their desired audience.

Why Is Video Latency An Issue?

When it comes to video-on-demand, latency is not an issue, but when it comes to real-time communication or live streaming, this delay becomes problematic. Virtual events, online education, webinars, and all-hands meetings all require video streaming that is as close to real time as possible.

According to ABI Research, live streaming will grow 10% to 91 million subscribers by 2024. Despite this, many OTT platform providers looking to enter the live streaming market face latency challenges. The good news is that some of the top OTT platform providers make the most of cutting-edge technologies to provide the audience with the lowest latency video streaming possible.

How Does Low-Latency Streaming Work?

Now that you understand what low latency is and why it is critical for any online video streaming platform, you may be wondering how you can deliver lightning-fast streams with the lowest possible video latency.

Low-latency video streaming, like most things in life, has tradeoffs. To find the right mix for you, you must balance three factors:

- The efficiency of encoding and device/player compatibility.

- Geographic distribution and audience size.

- Video complexity and resolution.

To be frank, the streaming protocol you select has a significant impact, so let’s look into that.

A streaming protocol is a set of rules that defines how data travels across the Internet from one device or system to another. Video streaming protocols standardized the method of segmenting a video stream into smaller chunks for easier transmission.
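To see why segmenting matters for latency, here is a minimal Python sketch. It assumes a segment-based protocol such as HLS in which the player holds a few whole segments before it starts playback; the default of three buffered segments is a common rule of thumb, not a property of every player, and the function name is hypothetical.

```python
def segment_buffer_latency(segment_seconds: float, buffered_segments: int = 3) -> float:
    """Estimate the delay (in seconds) added by segment buffering alone.

    Assumption: the player waits for `buffered_segments` complete segments
    before starting playback. Encoding and network delays are not included.
    """
    if segment_seconds <= 0:
        raise ValueError("segment_seconds must be positive")
    if buffered_segments < 1:
        raise ValueError("buffered_segments must be at least 1")
    return segment_seconds * buffered_segments


if __name__ == "__main__":
    # Shorter segments shrink the delay contributed by buffering.
    for seconds in (6.0, 2.0, 1.0):
        delay = segment_buffer_latency(seconds)
        print(f"{seconds:.0f} s segments -> about {delay:.0f} s behind live")
```

Under these assumptions, shortening segments is one of the simplest levers for cutting delay, at the cost of more requests and less efficient encoding.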

Many businesses expect HTTP-based low and ultra-low latency streaming protocols to win out in the long run. The reasons are quite simple: these protocols require much less work to implement in legacy infrastructure, benefit from more support across devices and browsers, scale more easily than WebRTC, and can be adopted by a greater number of companies due to lower licensing fees.

This is why we developed an end-to-end HLS video player capable of providing an unparalleled low latency video streaming experience. We believe that, in the not-too-distant future, HLS will be the single streaming protocol capable of meeting all video streaming use cases.

What Causes Latency In Video Streaming?

Latency is caused not only by the speed with which a signal travels from point A to point B, but also by the time it takes to process video from raw camera input to encoded and decoded video streams. Depending on your delivery chain and the number of video processing steps involved, a variety of factors can contribute to video latency. While these delays may seem insignificant on their own, they quickly add up. Some of the main causes of latency are described below.

1. Poor Internet Connection:

When it comes to video latency, one of the most common causes is a poor internet connection. If the connection is slow or unstable, the video tends to lag or buffer, ruining the overall video experience.

2. Low-Quality Hardware:

Another potential source of latency is low-quality or underpowered hardware. This includes the video camera, encoder, and decoder. If any of these components is underpowered, latency occurs, making real-time video streaming difficult to enjoy.

3. Weak Encoding Process:

The encoding process is in charge of compressing video data in order to send video signals over the internet. If this process is not carried out correctly, video quality may suffer and latency may increase significantly.

4. Distance:

The distance between the audience and the video source can also have an impact on the overall latency. The greater the distance, the longer it will take for the signal to reach the viewer. As a result, streaming video from another country usually gets delayed to some extent.

5. Server Load:

The server load rises with the number of users watching the video, which can have a considerable impact on the overall latency. In layman’s terms, if the video lags during a live match, an overloaded server may be the cause.

6. Streaming Protocol Issues:

The streaming protocol can also cause video latency. Delays may occur if the protocol is not designed or tuned for low-latency delivery, for example when it relies on long segments and deep player buffers.

7. CDN Issues:

A video content delivery network (CDN) is a geographically distributed network of servers that delivers content to viewers from a location close to them. A malfunctioning or poorly placed CDN can cause high latency video streaming. For best results, choose a CDN built for video streaming with servers near your audience.
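The causes listed above each add their own delay, and the total is what the viewer experiences. The following is a minimal Python sketch of such a latency budget. The stage names and millisecond values are hypothetical placeholders rather than measurements, and the propagation estimate assumes that signals travel through optical fiber at roughly 200,000 km/s, which gives only a lower bound because real network routes are longer than the straight-line distance.

```python
from typing import Mapping

# Approximate signal speed in optical fiber (about two thirds of the speed of light).
FIBER_SPEED_KM_PER_S = 200_000.0


def propagation_delay_ms(distance_km: float) -> float:
    """Lower-bound one-way delay over fiber for a given distance, in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return distance_km / FIBER_SPEED_KM_PER_S * 1000.0


def total_latency_ms(stages: Mapping[str, float]) -> float:
    """Sum the per-stage delays (in milliseconds) of a delivery chain."""
    return sum(stages.values())


# Hypothetical example values, for illustration only.
example_stages = {
    "capture": 30.0,
    "encode": 150.0,
    "propagation": propagation_delay_ms(8_000),  # viewer 8,000 km from the source
    "cdn_and_server": 200.0,
    "player_buffer": 2_000.0,
    "decode_and_render": 50.0,
}

if __name__ == "__main__":
    for name, delay in example_stages.items():
        print(f"{name:>18}: {delay:8.1f} ms")
    print(f"{'total':>18}: {total_latency_ms(example_stages):8.1f} ms")
```

In this illustrative budget, distance contributes only tens of milliseconds, while buffering and processing dominate. That is why tuning the encoder, protocol, and player usually pays off more than moving servers alone.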

How Does Latency Affect a Streaming Video Platform?

The longer a video takes to reach its audience, the more the overall quality of the streaming experience suffers. If you are a true cricket fan who enjoys watching the game while it is being played on a ground thousands of kilometers away, you will be disappointed when the final results are streamed minutes after the event has occurred.

Similarly, your viewers expect a glass-to-glass experience when they watch your live stream. Some of the common effects of latency on video streaming are given in the following section.

1. Synchronizing:

High latency can cause the video and audio to be out of sync, which is one of the most common problems. This typically happens because video takes longer to encode and decode than audio, so the two streams drift apart unless they are realigned using their timestamps. As a result, the audio frequently precedes the video, which can cause issues with lip-syncing and real-time video streaming.

2. Buffering:

Another common issue is that latency can cause the video to buffer or pause while it loads. Viewers may become irritated as they wait for the video to catch up. In many cases, they leave the video in the middle and never return to it.

3. Low Video Quality:

In some cases, efforts to reduce latency can degrade the overall video quality. This is due to the video signal being compressed more than necessary to reduce latency. As a result, the video may appear pixelated or blurry.

4. Rewind And Fast-Forwarding:

Latency can also make it difficult to rewind or fast-forward through a video. This is because the player must fetch the video data for the new position before playback can resume.

Data Speaks More Than Anything – According to a recent study, the majority of video streams still lag behind cable broadcasts in terms of delivery speed, which is unfortunate. On the other hand, some of the top OTT streaming solution providers offer a crystal-clear glass-to-glass experience with noticeably lower latency, which is great news for streamers who want to keep their audience cheering.

How To Reduce Latency In Video Streaming?

There are several methods for reducing video latency without sacrificing much on the picture quality. The first step is to select a hardware encoder and decoder combination designed to minimize latency, even when using a standard internet connection.

The most recent generation of video encoders and decoders can maintain low latency (as low as 50 ms in some cases) and have enough processing power to use HEVC to compress video to extremely low bitrates (as low as 3 Mbps) while maintaining high picture quality.

How to Choose the Right Low Latency Streaming Service for Your Needs?

Now that you have a better understanding of low-latency video streaming, you need to select a suitable low latency streaming platform, taking into consideration the protocols that they support.

1. Standard Video Latency (20+ seconds)

If high latency won’t degrade your audience’s streaming experience, then use standard HLS (HTTP Live Streaming) or DASH (Dynamic Adaptive Streaming over HTTP) protocols. These protocols are excellent for VOD or live events with large audiences who do not interact much. Also, this type of streaming allows for HD or 4K video quality at a massive scale.

2. Reduced Video Latency (5-20 seconds)

By optimizing your streaming chain, you can reduce your standard latency (HLS or DASH protocols) to under 20 seconds. You’ll get closer to the 5-second mark if you use RTMP (Real-Time Messaging Protocol). Above all, look for tuned HLS or DASH protocols for time-sensitive streams that do not allow audience interaction.

3. Low Latency Video (1-5 seconds)

To reduce latency further, multiple solutions must be combined. Low-latency video streaming protocols include LL-HLS, LL-DASH, SRT, and RTP/RTSP. This tier is great for any stream that needs to match cable TV’s 5-second content delivery.

4. Near-Real-Time Or Ultra-Low Video Latency (under 1 second)

Ultra-low video latency is considered to be real-time video streaming, which means the delay is barely noticeable. If you want to deliver competitive live streams with sub-second latency, you’ll require WebRTC (Web Real-Time Communication). This is essential in situations requiring two-way communication, such as online meetings and virtual classrooms.
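The four tiers above can be summarized as a simple lookup. The Python sketch below is only an illustration: the function name is hypothetical, and the exact handling of the boundary values (1, 5, and 20 seconds) is an assumption, since the tiers are rough ranges rather than strict cut-offs.

```python
from typing import List


def suggest_protocols(target_latency_seconds: float) -> List[str]:
    """Map a target latency to the protocol families named in the tiers above.

    Assumption: boundaries are treated as under 1 s, 1-5 s, 5-20 s, and above 20 s.
    """
    if target_latency_seconds <= 0:
        raise ValueError("target_latency_seconds must be positive")
    if target_latency_seconds < 1:
        return ["WebRTC"]  # near-real-time, two-way communication
    if target_latency_seconds <= 5:
        return ["LL-HLS", "LL-DASH", "SRT", "RTP/RTSP"]  # low latency
    if target_latency_seconds <= 20:
        return ["tuned HLS", "tuned DASH", "RTMP"]  # reduced latency
    return ["HLS", "DASH"]  # standard latency, large non-interactive audiences


if __name__ == "__main__":
    for target in (0.5, 3, 10, 30):
        print(f"{target} s target -> {', '.join(suggest_protocols(target))}")
```

A real platform choice would also weigh audience size, device support, and cost, which this lookup deliberately ignores.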

To Sum Up

Low latency video streaming is an important factor that can help you on multiple levels, from providing a better customer experience to increasing customer engagement. At the same time, this is frequently overlooked by most streaming companies, particularly new entrants. A buffer-free streaming experience is one of the most important factors to consider if you want your video streaming business to be successful in the long run.

Customers always want the best service, and their expectations are constantly rising. To be frank, we all expect lightning-fast load times and smooth video playback when streaming on-demand videos. What may be less obvious is that, whether we realize it or not, that expectation is gradually carrying over to real-time video communication and live streaming applications as well.

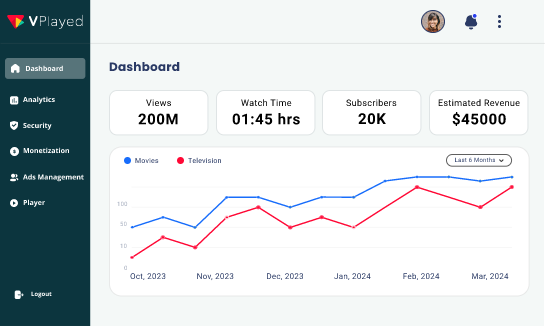

VPlayed is obsessed with providing the best experience for both developers and viewers. So it’s no surprise that we spend a lot of time thinking about and staying current on video protocol development in order to provide business owners with the best video on-demand solutions and ultra low latency video streaming platforms.

“Get ready to deliver ultra low latency video streaming with your own customized OTT platform”

Frequently Asked Questions (FAQs):

What is video streaming latency?

Video streaming latency is the delay between the produced content and when it reaches the viewer. Lower latency is crucial for users as it ensures real-time interactions, reduces buffering, and enhances the overall streaming experience.

Why is low latency important?

Low latency is vital as it minimizes delays, providing users with a more responsive and engaging viewing experience. It is especially important for streaming live events and interactive content.

How can you reduce latency in video streaming?

To reduce latency in video streaming, you can employ advanced video codecs, optimize encoding parameters, and strategically utilize Content Delivery Networks (CDNs). In addition, implementing chunked streaming, where content is divided into smaller segments, and adopting protocols like WebRTC, designed for real-time communication, can contribute to reduced latency and a more responsive streaming experience.

What causes high latency?

High latency often stems from encoding delays during content production, network congestion leading to slower data transfer, inefficient streaming protocols causing delays in content delivery, and excessive buffering as a result of inadequate bandwidth.

What are the benefits of low latency?

Low latency leads to immediate content delivery, reducing buffering and enabling seamless interactions. In other words, it is ideal for live events, gaming, and other applications where real-time engagement is essential.